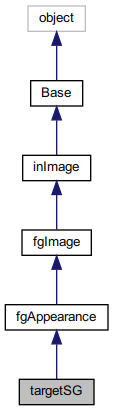

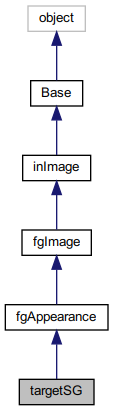

Single-Gaussian based target detection with full covariance.

Public Member Functions | |

| def | __init__ (self, tModel_SG tModel=tModel_SG(), Params params=Params()) |

| The targetSG constructor. | |

| def | calibrate (self, mode="ImgDiff", *args) |

| def | loadMod (self, filename) |

| def | measure (self, I) |

| Generate detection measurements from image input. | |

| def | saveMod (self, filename) |

| def | update_th (self, val) |

Public Member Functions inherited from fgAppearance | |

| def | __init__ (self, appMod, fgIm) |

| def | displayForeground_cv (self, ratio=None, window_name="Foreground") |

| def | emptyState (self) |

| Return empty state. | |

| def | getBackground (self) |

| def | getForeGround (self) |

| def | getState (self) |

| Return current/latest state. | |

Public Member Functions inherited from fgImage | |

| def | __init__ (self, processor=None) |

Public Member Functions inherited from inImage | |

| def | info (self) |

| Provide information about the current class implementation. | |

Public Member Functions inherited from Base | |

| def | __init__ (self) |

| Instantiate a detector Base activity class object. | |

| def | adapt (self) |

| Adapt any internal parameters based on activity state, signal, and any other historical information. | |

| def | correct (self) |

| Reconcile prediction and measurement as fitting. | |

| def | detect (self, signal) |

| Run detection only processing pipeline (no adaptation). | |

| def | emptyDebug (self) |

| Return empty debug state information. | |

| def | getDebug (self) |

| Return current/latest debug state information. | |

| def | predict (self) |

| Predict next state from current state. | |

| def | process (self, signal) |

| Process the new incoming signal on full detection pipeline. | |

| def | save (self, fileName) |

| Outer method for saving to a file given as a string. | |

| def | saveTo (self, fPtr) |

| Empty method for saving internal information to HDF5 file. | |

Static Public Member Functions | |

| def | buildFromImage (img, Params params=Params(), *args, **kwargs) |

| def | buildImgDiff (bgImg, fgImg, vis=False, ax=None, Params params=Params()) |

| def | buildSimple () |

| def | get_fg_imgDiff (bgImg, fgImg, th) |

Static Public Member Functions inherited from Base | |

| def | load (fileName, relpath=None) |

| Outer method for loading file given as a string (with path). | |

| def | loadFrom (fPtr) |

| Empty method for loading internal information from HDF5 file. | |

Public Attributes | |

| fgIm | |

| params | |

Public Attributes inherited from fgAppearance | |

| fgIm | |

Public Attributes inherited from inImage | |

| Ip | |

| processor | |

Public Attributes inherited from Base | |

| x | |

| Detection state. | |

Detailed Description

Single-Gaussian based target detection with full covariance.

Interface for the target detection module based on single-Gaussian RGB color modeling of the target. Effectively, target object pixels are assumed to have similar RGB values, modeled by a single Gaussian distribution. Test images are transformed, per the model, into a color space with uncorrelated components by diagonalizing the covariance matrix. Foreground detection is done by thresholding each component independently in the transformed color space (marked with the suffix _tilt):

|color_tilt_i - mu_tilt_i| < tau * cov_tilt_i, for all i=1,2,3

tau is a threshold parameter.
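The test above can be sketched with NumPy as follows. This is a minimal illustration, not the targetSG implementation: the function names `fit_single_gaussian` and `detect_target` are hypothetical, the diagonalization is assumed to be an eigendecomposition of the sample covariance, and `cov_tilt_i` is read literally as the i-th diagonal entry (eigenvalue) of the diagonalized covariance.

```python
import numpy as np

def fit_single_gaussian(pixels):
    """Estimate the mean and diagonalized covariance from (N, 3) target pixels."""
    pixels = np.asarray(pixels, dtype=float)
    mu = pixels.mean(axis=0)
    sigma = np.cov(pixels, rowvar=False)       # full 3x3 covariance
    # sigma = V diag(eigvals) V^T, so V rotates colors into uncorrelated axes.
    eigvals, eigvecs = np.linalg.eigh(sigma)
    return mu, eigvals, eigvecs

def detect_target(image, mu, eigvals, eigvecs, tau):
    """Return an (H, W) boolean mask of pixels that pass the test on every component."""
    h, w, _ = image.shape
    flat = image.reshape(-1, 3).astype(float)
    # color_tilt - mu_tilt: centered colors expressed in the eigenbasis.
    dev_tilt = (flat - mu) @ eigvecs
    # Literal reading of the documented rule (assumption: cov_tilt_i = eigvals[i]).
    inside = np.abs(dev_tilt) < tau * eigvals
    return inside.all(axis=1).reshape(h, w)
```

A larger `tau` widens the accepted box around the mean color in the transformed space, so more pixels are labeled as target.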

The interface is adapted from the fgmodel/targetNeon.

Constructor & Destructor Documentation

◆ __init__()

The targetSG constructor.

Args:

tModel (tModel_SG, optional): The target model class storing the single Gaussian model statistics. Defaults to tModel_SG(), which is an empty model and requires further calibration.

params (Params, optional): Additional parameters stored as the targetSG.Params instance. Defaults to Params().

Member Function Documentation

◆ buildFromImage()

Instantiate a single-Gaussian color target model from image selections.

Args:

img: The image to calibrate on

nPoly (optional): The number of polygon target areas to draw. Defaults to 1

fh (optional): The figure handle for displaying the image on which to draw the ROI

◆ buildImgDiff()

[summary]

Args:

bgImg (np.ndarray): The background image

fgImg (np.ndarray): The foreground object image

vis (bool, optional): If true, will visualize the extracted foreground region

ax (matplotlib.axes.Axes, optional): The axis for visualizing the extracted foreground region. Defaults to None, which means will create a new figure

params (Params, optional): [description]. Defaults to Params().

Returns:

det [targetSG.targetSG]: A targetSG instance

◆ buildSimple()

| def | buildSimple () | static |

Return a targetSG detector instance given a set of target pixels

◆ calibrate()

| def | calibrate (self, mode="ImgDiff", *args) |

Calibrate the stored appearance model. This permits re-calibrating the stored appearance model, which might be uninitialized or outdated. Now will simply update

◆ get_fg_imgDiff()

| def | get_fg_imgDiff (bgImg, fgImg, th) | static |

Use the image difference to get the foreground mask

Args:

bgImg (np.ndarray, (H,W,3)): The background image

fgImg (np.ndarray, (H, W, 3)): The foreground image

th (int): The threshold for the image difference map

Returns:

mask [np.ndarray, (H, W)]: The foreground mask
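A minimal sketch of thresholding an image difference map is shown below. It is not the body of get_fg_imgDiff: the helper name `fg_mask_from_diff` is hypothetical, and averaging the absolute difference over the three channels before comparing against `th` is an assumption, since the documentation does not say how channels are combined.

```python
import numpy as np

def fg_mask_from_diff(bg_img, fg_img, th):
    """Return an (H, W) boolean mask where fg_img differs from bg_img by more than th."""
    # Cast to a signed type first so uint8 subtraction cannot wrap around.
    diff = np.abs(fg_img.astype(np.int32) - bg_img.astype(np.int32))
    # Assumption: collapse the channel axis with a mean before thresholding.
    return diff.mean(axis=2) > th
```

Pixels inside the resulting mask could then serve as the target samples for fitting the single-Gaussian color model.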

◆ loadMod()

| def | loadMod (self, filename) |

◆ measure()

| def | measure (self, I) |

Generate detection measurements from image input.

The base method does not compute anything itself, but it applies image processing if an image processor is defined. In this manner, simple detection schemes may be implemented by passing the input image through the image processor.

Reimplemented from inImage.
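The pass-through behavior described for the base method can be illustrated with the sketch below. The classes `ThresholdProcessor` and `InImageSketch` are hypothetical stand-ins, not the detector package's classes; the `apply` method name and the choice to store the raw image when no processor is set are assumptions. Only the attribute names `processor` and `Ip` come from the attribute list on this page.

```python
import numpy as np

class ThresholdProcessor:
    """Hypothetical image processor that binarizes a grayscale image."""

    def __init__(self, level):
        self.level = level

    def apply(self, image):
        return image > self.level

class InImageSketch:
    """Stand-in for a base detector whose measure() delegates to a processor."""

    def __init__(self, processor=None):
        self.processor = processor
        self.Ip = None  # processed image produced by the latest measure() call

    def measure(self, I):
        if self.processor is not None:
            self.Ip = self.processor.apply(I)
        else:
            # Assumption: with no processor, the input is passed through unchanged.
            self.Ip = I

detector = InImageSketch(ThresholdProcessor(level=15))
detector.measure(np.array([[0, 10], [20, 30]]))
```

A subclass such as targetSG would override `measure` to run its own color-model test instead of relying on the processor alone.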

◆ saveMod()

| def | saveMod (self, filename) |

◆ update_th()

| def | update_th (self, val) |

Member Data Documentation

◆ fgIm

| fgIm |

◆ params

| params |

The documentation for this class was generated from the following file:

- /local/source/python/detector/detector/fgmodel/targetSG.py